1. The Dream: Can Machines Understand Language?

Language is one of the most powerful tools humans have. For decades, scientists and mathematicians have tried to teach machines to understand human language and generate human-like responses, a field known as Natural Language Processing (NLP).

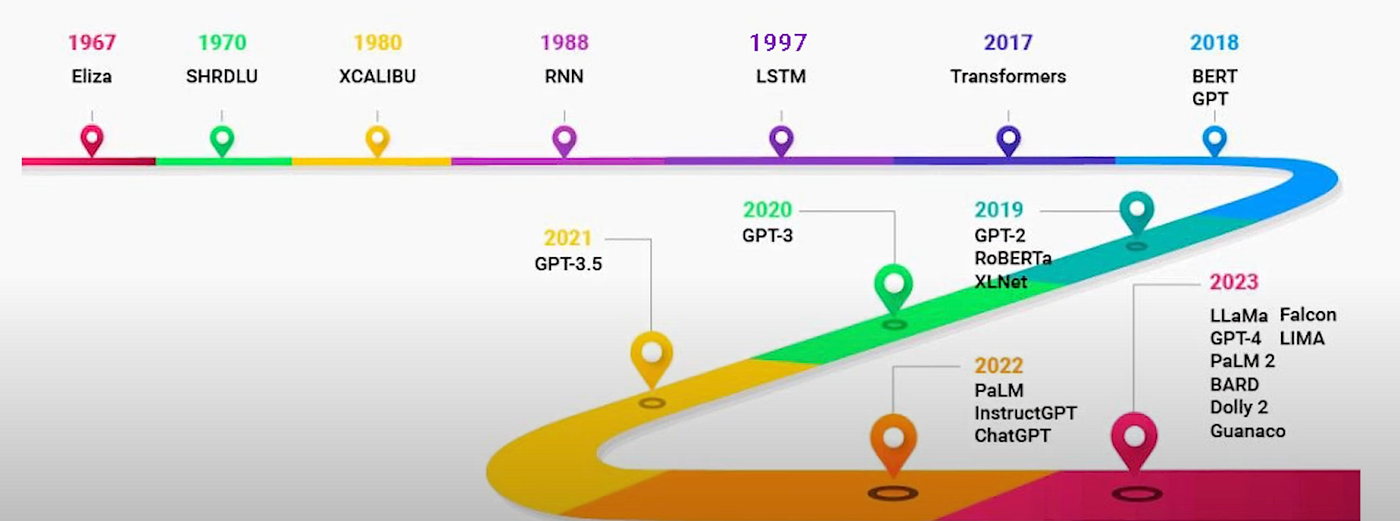

A Brief History

1950

Alan Turing, often called the father of computer science, asks "Can machines think?" and proposes a way to test it, now known as the Turing Test.

1966

Joseph Weizenbaum creates ELIZA, one of the first chatbots, which mimics a therapist.

1980s

Rule-based language systems start emerging.

2000s

With more data and statistical methods, rule-based systems start being replaced by machine learning algorithms, an approach that is going to change everything.

2017

The Transformer architecture is introduced by Ashish Vaswani and his colleagues at Google. It is the architecture on which modern LLMs are built.

2020+

LLMs such as GPT-3 and GPT-4 (OpenAI), Gemini (Google), and Claude (Anthropic) emerge.

2. What Is an LLM?

A Large Language Model is essentially an AI system trained on massive amounts of text (like books, websites, and conversations) to predict the next word in a sentence.

That’s right, it’s like super-autocomplete.

If I say, “The sky is…”, an LLM will guess the next word might be “blue.”

By doing this over billions of sentences, the model learns patterns, grammar, facts, and even some reasoning.
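To make "learning patterns from lots of text" concrete, here is a toy Python sketch. It is not how an LLM works internally (LLMs use neural networks with billions of parameters, not word counts); it is a simple count-based bigram model, trained on a tiny made-up corpus, that records which word tends to follow which and then guesses the next word. The names `train_bigram` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(sentences):
    """Count how often each word follows each other word (a bigram model)."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows, prompt):
    """Guess the word most often seen after the prompt's last word."""
    last = prompt.lower().split()[-1]
    if last not in follows:
        return None  # never seen this word, so no guess
    return follows[last].most_common(1)[0][0]


# A tiny, made-up training corpus (real LLMs see billions of sentences).
corpus = [
    "the sky is blue",
    "the sky is blue today",
    "the sky is grey",
    "the sea is blue",
]

model = train_bigram(corpus)
print(predict_next(model, "The sky is"))  # blue
```

This toy only looks at the last word. A Transformer looks at the whole context using attention (Section 5), which is why it can make far better guesses.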

3. The Core Idea: Prediction

Let’s try a simple example.

You say: "Once upon a..."

LLM thinks: "time"

You say: "There was a brave..."

LLM thinks: "knight"

This is how it works: it predicts one word at a time, very quickly, adding each predicted word to the text before predicting the next one.
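Generating text is this prediction step in a loop: predict a word, append it, predict again. The sketch below assumes a small hand-written table of next-word probabilities (the numbers are made up; a real LLM computes them with a neural network from the whole text so far) and always picks the most likely word, a strategy called greedy decoding. `NEXT_WORD_PROBS` and `generate` are hypothetical names.

```python
# Hypothetical, hand-written next-word probabilities. A real LLM computes
# these on the fly from the whole text so far, not just the last word.
NEXT_WORD_PROBS = {
    "upon": {"a": 0.9, "the": 0.1},
    "a": {"time": 0.8, "hill": 0.2},
    "time": {"there": 0.7, "ago": 0.3},
    "there": {"was": 0.6, "lived": 0.4},
    "was": {"peace": 0.6, "a": 0.4},
}


def generate(prompt, max_new_words=10):
    """Greedy decoding: repeatedly append the single most likely next word."""
    words = prompt.split()
    for _ in range(max_new_words):
        options = NEXT_WORD_PROBS.get(words[-1])
        if not options:
            break  # no known continuation, so stop
        best = max(options, key=options.get)
        words.append(best)
    return " ".join(words)


print(generate("Once upon"))  # Once upon a time there was peace
```

Real models often sample from these probabilities instead of always taking the top word, which makes their output less repetitive.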

4. Why "Large" Language Model?

It’s called “large” because it has:

Billions of parameters (think of parameters as knobs that the AI tunes while learning).

Training data measured in terabytes: millions of books' worth of text!

Huge computational requirements: training an LLM can cost millions of dollars and run on thousands of GPUs.

FACT: GPT-3 has 175 billion parameters. GPT-4 is widely believed to be larger, but its exact size has not been made public.
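A quick back-of-the-envelope calculation shows what 175 billion parameters means in practice. This sketch assumes each parameter is stored as a 16-bit (2-byte) number and uses a common rule of thumb that training costs about 6 floating-point operations per parameter per training token; the figure of roughly 300 billion training tokens is the one reported for GPT-3 in its paper. These are rough estimates, not official numbers.

```python
BYTES_PER_PARAM = 2      # assumption: 16-bit (fp16/bf16) weights
GPT3_PARAMS = 175e9      # 175 billion parameters
TRAINING_TOKENS = 300e9  # assumption: ~300 billion tokens, as reported for GPT-3

# Memory just to hold the weights (no activations, no optimizer state).
weight_bytes = GPT3_PARAMS * BYTES_PER_PARAM
print(f"Weights: {weight_bytes / 1e9:.0f} GB")  # Weights: 350 GB

# Rule of thumb: training compute ~ 6 x parameters x tokens (in FLOPs).
training_flops = 6 * GPT3_PARAMS * TRAINING_TOKENS
print(f"Training compute: {training_flops:.2e} FLOPs")  # Training compute: 3.15e+23 FLOPs
```

A single data-center GPU has tens of gigabytes of memory, so even storing GPT-3's weights means splitting them across several GPUs.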

5. Transformers: The Secret Sauce

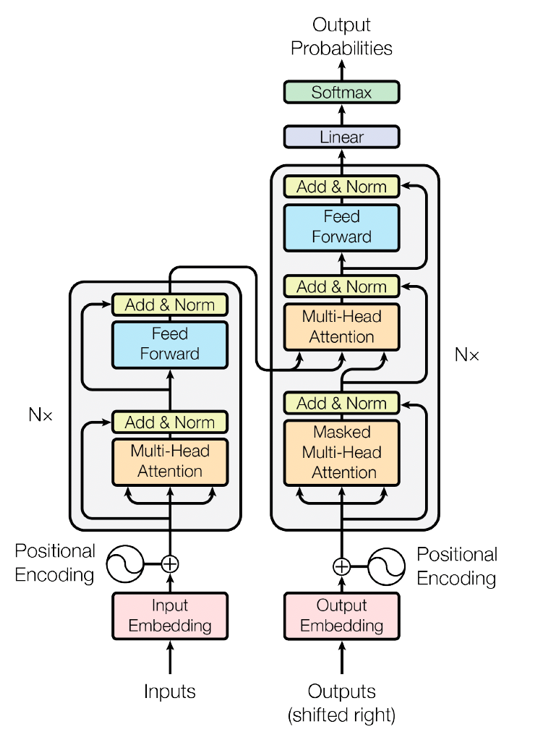

The real breakthrough came in 2017 with a research paper titled “Attention Is All You Need” by Ashish Vaswani and his colleagues at Google.

This introduced the Transformer architecture, which made today's LLMs possible.

How Does a Transformer Work?

At its heart is a mechanism called attention, which works a bit like the way you focus on certain words when reading.

Example:

In the sentence "The cat sat on the mat because it was tired," what does “it” refer to?

Answer: "cat". A Transformer works this out using attention, by linking "it" strongly to "cat".
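Attention can be written as a small formula: each word's query vector is compared with every word's key vector (a dot product), the scores are turned into weights with softmax, and the weights say how much each word "looks at" the others. Below is a minimal NumPy sketch of scaled dot-product attention, the form used in the Transformer paper. The 2-dimensional vectors are invented by hand so that the query for "it" points toward "cat"; in a real model these vectors are learned, much longer, and produced by learned projections that this sketch skips.

```python
import numpy as np


def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, as in "Attention Is All You Need"."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)                # similarity of each query to each key
    scores -= scores.max(axis=-1, keepdims=True)   # for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # each row sums to 1
    return weights @ V, weights


tokens = "The cat sat on the mat because it was tired".split()

# Hand-made 2-D key vectors (made up for illustration):
# column 0 ~ "is something that can get tired", column 1 ~ "is an object".
K = np.zeros((len(tokens), 2))
K[tokens.index("cat")] = [2.0, 0.0]
K[tokens.index("mat")] = [0.0, 2.0]
V = K  # values: reuse the keys to keep the sketch small

# Hand-made query for "it": in "it was tired", it looks for something that can be tired.
q_it = np.array([[2.0, 0.0]])

_, weights = scaled_dot_product_attention(q_it, K, V)
best = tokens[int(weights[0].argmax())]
print(best)  # cat
```

In a real Transformer every word computes its query at the same time, so Q has one row per word and the whole attention pattern is a single matrix product. That is part of why a Transformer can read the whole sentence at once.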

Key Components

Tokenization – Breaking sentences into small pieces (words or word parts).

Embeddings – Turning those pieces into numbers (vectors).

Attention Layers – Deciding which words matter most in context.

Output – Predicting the next word based on all this.

Think of it as a super-fast reader that looks at the whole sentence at once and works out which parts matter most for understanding the context.
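The four key components can be wired together in a few lines. This NumPy sketch is an untrained toy, assuming a six-word vocabulary, a word-level tokenizer, random embeddings, one attention layer without learned projections, and a random output matrix, so its "predictions" are meaningless. It only shows how data flows from text to a probability for every possible next word. Real models use subword tokenizers, many stacked layers, and parameters learned in training; all names here are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical tiny vocabulary; real models have tens of thousands of (sub)word tokens.
vocab = ["the", "cat", "sat", "on", "mat", "tired"]
token_id = {word: i for i, word in enumerate(vocab)}
d_model = 8  # length of each embedding vector

embedding_table = rng.normal(size=(len(vocab), d_model))  # random, i.e. untrained
output_matrix = rng.normal(size=(d_model, len(vocab)))     # maps a vector to vocab scores


def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def next_word_probs(text):
    # 1. Tokenization: split into pieces and map each to an integer ID.
    ids = [token_id[w] for w in text.lower().split()]
    # 2. Embeddings: look up a vector for every token.
    X = embedding_table[ids]  # shape (n_tokens, d_model)
    # 3. Attention: each position mixes in information from the others.
    #    (The last position may see every earlier word, so a causal mask
    #    would not change the row used below.)
    weights = softmax(X @ X.T / np.sqrt(d_model))
    mixed = weights @ X
    # 4. Output: score every vocabulary word from the last position's vector.
    return softmax(mixed[-1] @ output_matrix)


probs = next_word_probs("The cat sat on the")
print({w: round(float(p), 3) for w, p in zip(vocab, probs)})
```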

6. How Does Training Work?

Imagine you give the AI millions of books and tell it: “Read this and predict the next word.”

Each time its prediction is wrong, it adjusts its parameters slightly to make the correct word more likely. Over months of training, it becomes better and better at making predictions, just by tuning those knobs.

Analogy: this is a bit like how a child learns language, by hearing it, making mistakes, and improving.
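Here is a minimal NumPy sketch of "adjust the knobs slightly after every mistake". The model is a tiny table of scores (one row per current word, one column per possible next word), trained with plain gradient descent on the cross-entropy loss, the standard next-word training objective. The corpus, learning rate, and number of steps are made-up assumptions; real LLMs apply the same idea to billions of parameters with more sophisticated optimizers.

```python
import numpy as np

corpus = "the cat sat on the mat the cat was tired".split()
vocab = sorted(set(corpus))
idx = {w: i for i, w in enumerate(vocab)}
vocab_size = len(vocab)

# Training pairs: (current word, next word).
inputs = np.array([idx[w] for w in corpus[:-1]])
targets = np.array([idx[w] for w in corpus[1:]])

# The "knobs": one score (logit) for every (current word, next word) pair.
W = np.zeros((vocab_size, vocab_size))
learning_rate = 1.0


def loss_and_grad(W):
    logits = W[inputs]  # (n_pairs, vocab_size)
    logits = logits - logits.max(axis=1, keepdims=True)
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)
    n = len(targets)
    # Cross-entropy: how surprised the model is by the real next word.
    loss = -np.log(probs[np.arange(n), targets]).mean()
    # Gradient w.r.t. the logits is (probs - one_hot(target)) / n.
    dlogits = probs.copy()
    dlogits[np.arange(n), targets] -= 1
    dlogits /= n
    grad = np.zeros_like(W)
    np.add.at(grad, inputs, dlogits)  # accumulate rows for repeated input words
    return loss, grad


initial_loss, _ = loss_and_grad(W)
for step in range(200):
    loss, grad = loss_and_grad(W)
    W -= learning_rate * grad  # nudge every knob slightly to reduce the loss
final_loss, _ = loss_and_grad(W)

print(f"loss before: {initial_loss:.3f}, after: {final_loss:.3f}")
print("after 'the':", vocab[int(W[idx["the"]].argmax())])  # after 'the': cat
```

Real training follows the same idea, but the gradients are computed by backpropagation through the whole network rather than by a hand-derived formula like this one.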

7. Challenges and Future

LLMs are powerful, but not perfect. There is always room for improvement, and here are some of the challenges they face today (solving one of them could be a great entrepreneurial opportunity):

Hallucinations – Models sometimes make things up, producing confident-sounding answers that are false or not supported by the context.

Bias – Since training data is drawn from the vast ocean of the internet, models can reflect the societal biases present in that data.

Cost – Large models are very expensive to train and run.

Future and Opportunities

Smaller, faster models on phones.

Multimodal models – handling text, images, and video.

Personalized LLMs tailored for individual users.

AI agents – LLMs that can act and plan, not just chat.